
Hany Farid, a professor at the University of California, Berkeley, is one of the world's foremost experts on the analysis of digital images, including deep fakes and other forms of manipulation. He has been called the "father" of digital forensics, has spoken before Congress, and has earned numerous fellowships and awards for his trailblazing work in this field.

I first corresponded with Hany more than a decade ago, when I was researching an ebook for Black Star Publishing called "Photojournalism, Technology and Ethics," which was released in 2012. The acceleration in the sophistication and ubiquity of manipulated and synthesized images since my first contact with Hany has been mind-boggling.

I have long followed Hany's work on LinkedIn with fascination, and I was particularly struck by his most recent study with Sophie J. Nightingale, published in the Proceedings of the National Academy of Sciences (PNAS).

The study's abstract shares its startling results on AI and trust:

> Artificial intelligence (AI)–synthesized text, audio, image, and video are being weaponized for the purposes of non-consensual intimate imagery, financial fraud, and disinformation campaigns. Our evaluation of the photorealism of AI-synthesized faces indicates that synthesis engines have passed through the uncanny valley and are capable of creating faces that are indistinguishable—and more trustworthy—than real faces.

Doubting these results? Then here's a quick quiz for you.

Is the person below real or fake?

If you said "fake," you're right.

But 87% of participants in the study thought she was real.

In an email interview, I asked Farid about the implications of his study for marketers, Big Tech, and society as a whole. Here is our discussion.

Q: Hany, you have been a leader in studying the phenomenon of deep fakes for years now. What was the impetus for this PNAS study?

A: Because we focus on developing computational techniques for detecting manipulated or fake images, audio, and video, it is natural for us to periodically ask how realistic the various manipulation techniques have become. That helps us evaluate the level of risk each technique poses.

In this study, we focused on the rapidly evolving field of AI-synthesis (aka deep fakes).

Q: You created the fake faces for your study with a type of machine-learning model called a generative adversarial network, or GAN. How do GANs work?

A: Generative adversarial networks (GANs) are popular mechanisms for synthesizing content. A GAN pits two neural networks—a generator and discriminator—against each other. To synthesize an image of a fictional person, the generator starts with a random array of pixels and iteratively learns to synthesize a realistic face. On each iteration, the discriminator learns to distinguish the synthesized face from a corpus of real faces; if the synthesized face is distinguishable from the real faces, then the discriminator penalizes the generator.

Over multiple iterations, the generator learns to synthesize increasingly realistic faces until the discriminator is unable to distinguish them from real faces.
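To make the generator-versus-discriminator loop concrete, here is a toy one-dimensional sketch in plain Python. It is not the face-synthesis model used in the study, and every detail is an assumption chosen for illustration: the "real data" are numbers drawn from a normal distribution with mean 3, the generator only learns a shift `b` that it adds to random noise, the discriminator is a single logistic unit with weight `w`, and the function name `train_toy_gan`, learning rates, batch size, and step count are hypothetical. The generator uses the common "non-saturating" loss (it tries to get its fakes labeled real).

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=3.0, steps=4000, batch=64, lr_d=0.05, lr_g=0.05, seed=0):
    """Hypothetical toy 1-D GAN; returns the generator shift b and discriminator weight w.

    Real data ~ N(real_mean, 1). Generator: G(z) = b + z with z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w * x), the estimated probability that x is real.
    """
    rng = random.Random(seed)
    b = 0.0  # generator starts far from the real data
    w = 0.0  # discriminator starts with no opinion
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)),
        # i.e. learn to tell synthesized samples from real ones.
        grad_w = sum((sigmoid(w * x) - 1.0) * x for x in real)
        grad_w += sum(sigmoid(w * x) * x for x in fake)
        w -= lr_d * grad_w / batch

        # Generator step: minimize -log D(G(z)). The loss is large when the
        # discriminator spots the fakes, which is how the generator is "penalized".
        fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_b = sum((sigmoid(w * x) - 1.0) * w for x in fake)
        b -= lr_g * grad_b / batch
    return b, w


b, w = train_toy_gan()
print(f"generator shift b = {b:.2f} (real mean 3.0), discriminator weight w = {w:.2f}")
```

Because this discriminator can only compare averages, the generator only has to match the mean of the real data, and once it does, the discriminator's weight drifts back toward zero (it can no longer tell the two apart). A face GAN replaces both players with deep networks, but the alternating update follows the same basic idea.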

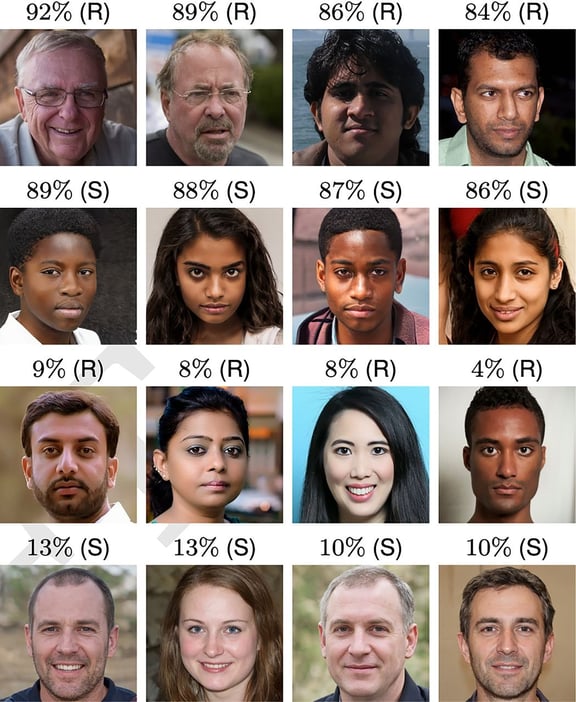

In the image above, the faces marked (R) are real and those marked (S) are synthetic. The number indicates the percentage of study participants who correctly categorized each face.

Q: I know there is a science to what people find attractive in a human face. For example, people find symmetrical features more attractive than asymmetrical ones. Is there also a science behind which facial features we find trustworthy versus untrustworthy, and if so, was this factored into the images created for the study?

A: We did not factor in any criteria other than ensuring diversity across gender, race, and age. The fact that our study participants found synthetic faces more trustworthy was an organic result.

While we don’t know specifically why the synthetic faces are rated as more trustworthy, we hypothesize that it is because, as an artifact of how GANs generate faces, synthesized faces tend to look more like average faces, which are themselves deemed more trustworthy.

Q: Following up on this point, if there are facial features that convey trustworthiness, shouldn’t we assume that in time we will master the creation of synthetic images that perfect the aspects of the human face that establish trust? And if so, should we assume it’s inevitable that in time, synthetic images will always be perceived as more trustworthy than real faces, in the same way that the best computers today can always beat the top chess grandmasters?

A: It is reasonable to assume that as the synthesis techniques improve, synthetic faces (and eventually voices and videos) will continue to improve in realism and whatever other features the creators want, including trustworthiness and attractiveness.

Q: How optimistic are you that detection tools will be able to keep up with deep fakes or synthetic images passed off as real? Are we fighting a losing battle or is there real hope for detection technology to get ahead of this trend?

A: We are fighting a losing battle, particularly when you consider the scale at which the internet operates, with billions of uploads every day. Forensic techniques will almost certainly not be able to keep up in this cat-and-mouse game.

There is, however, an alternative way to think about how to regain trust online. Instead of relying on authentication after the fact (when content is uploaded), there are emerging control-capture technologies that use the native camera app to authenticate content at the point of recording (see, for example, Truepic).

A device-side authentication certificate is then attached to the image, audio, or video, for downstream authentication.
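As a rough illustration of point-of-capture authentication, the sketch below hashes content when it is recorded, attaches a signed record, and checks that record downstream. Everything here is an assumption rather than a description of how Truepic or any other product works: the function names, `DEVICE_KEY`, and the record format are hypothetical, and an HMAC with a shared secret (from Python's standard `hmac` module) stands in for the device-held private key and certificate a real system would use, so that the example needs only the standard library.

```python
import hashlib
import hmac
import json

# Hypothetical device secret. A real control-capture system would sign with a
# private key held on the device and attach a certificate, not a shared secret.
DEVICE_KEY = b"hypothetical-device-secret"


def sign_at_capture(content: bytes, device_id: str, key: bytes = DEVICE_KEY) -> dict:
    """Build a hypothetical authentication record at the moment of recording."""
    digest = hashlib.sha256(content).hexdigest()
    payload = json.dumps({"device_id": device_id, "sha256": digest}, sort_keys=True)
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_downstream(content: bytes, record: dict, key: bytes = DEVICE_KEY) -> bool:
    """Return True only if the record is genuine and still matches the content."""
    expected = hmac.new(key, record["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["tag"]):
        return False  # the record itself was forged or altered
    claimed = json.loads(record["payload"])["sha256"]
    return hmac.compare_digest(claimed, hashlib.sha256(content).hexdigest())


photo = b"stand-in for raw image bytes"
record = sign_at_capture(photo, device_id="camera-123")
print(verify_downstream(photo, record))              # True
print(verify_downstream(photo + b"edited", record))  # False
```

Any change to the bytes changes the SHA-256 digest, so verification fails, and a forged record fails because it cannot carry a valid tag without the key. With a shared secret, however, anyone who can verify can also sign, which is why real deployments rely on public-key signatures instead.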

Q: Big tech companies (Google, Amazon, Microsoft, Apple, Facebook) have been criticized for not doing enough to keep fake news, including deep fake images and video, off their platforms. How can these companies do a better job in the future, considering the job of policing images is becoming more challenging every day?

A: Control-capture technology can be a powerful tool in countering misinformation. However, larger changes by the titans of tech are needed.

In particular, these services have proven that they are more interested in growth and profit than in ensuring that their services don’t harm individuals, societies, and democracies.

The time has come for our legislators to consider enacting laws and regulations to rein in the most egregious online abuses.

Q: What should the role of government be in fighting the use of synthetic images for nefarious purposes?

A: When it comes to things like non-consensual pornography, fraud, election interference, and dangerous disinformation campaigns designed, for example, to disrupt an election, I believe that the government has a role.

Any intervention, however, has to carefully balance our desire for an open exchange of ideas against the need to ensure the safety of individuals and societies.

Q: The Trust Signals blog has an audience primarily of marketers. What trends should they be most attuned to that might affect their work? I saw recently, for example, that stock photo platforms have begun offering images in which the subjects are not real.

A: Individualized and customizable synthetic images, audio, and videos are coming. These technologies offer many interesting ways for marketers to interact with customers.

There are, however, also some complex ethical questions that will arise. For example, should a marketer be required to disclose that a customer is talking with a synthetic person?

Q: What are the risks presented to brands by fake images, and how can they deal with these risks proactively?

A: Disinformation campaigns fueled by deep fakes are a major threat to individuals and brands. Organizations can use control-capture technology in all of their external communications so that when content does surface online without the appropriate authentication, it can be more readily debunked.

Q: What will be the most important impacts of the trends you have identified on the average American consumer?

A: It is becoming increasingly difficult to believe what we see, hear, and read online. This is not, in my opinion, a particularly healthy online ecosystem.

A combination of technology, policy, law, and education is needed to move us out of the mess that is the internet today and toward a healthier, more civil, and more honest online space.

Scott is founder and CEO of Idea Grove, one of the most forward-looking public relations agencies in the United States. Idea Grove focuses on helping technology companies reach media and buyers, with clients ranging from venture-backed startups to Fortune 100 companies.
